In a previous post, we introduced our blog series on Kubernetes security fundamentals. Component API security is the first topic we'll cover in this series. Kubernetes is made up of a number of components, which present APIs that can be called remotely.

As they're the entry point for users, ensuring that these APIs are properly secured is an important part of overall cluster security. In this post, we'll take a look at what ports are available, what their purpose is, and some of the risks of exposing them.

Kubernetes components and ports

The set of ports available to cluster operators will vary based on whether you're using unmanaged or managed Kubernetes. As such, it makes sense to discuss these cases separately in this post.

Unmanaged Kubernetes

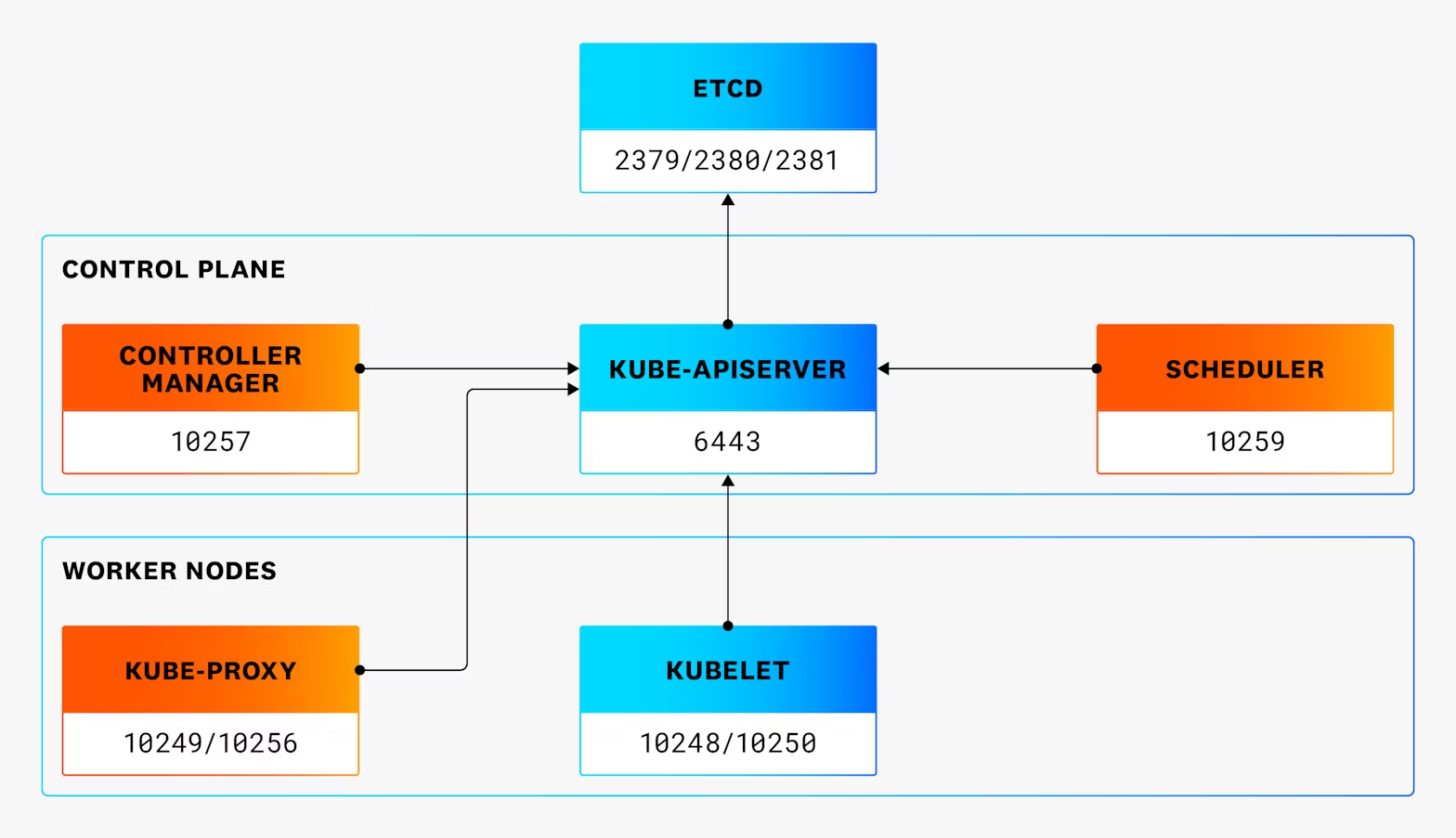

If we use kubeadm as the canonical example of unmanaged Kubernetes, the default set of services and ports looks like the diagram above.

When we're thinking about possible security implications of exposed network ports, it's important to understand the purpose of each one, and what the default settings are (e.g., visibility on the network and whether endpoints are available without authentication).

One slight complication is that, while the functionality of kube-apiserver and the etcd server is well documented, the functionality of the other APIs is less well known, so you may need to consult the Kubernetes source code to discover relevant information.

Exposed ports in unmanaged Kubernetes

As shown in the previous diagram, various Kubernetes components expose ports for different purposes. Next, we'll cover the default settings of these ports in more detail, broken down by component.

Port 2379/TCP (etcd): This is the main port that client systems use to connect to etcd. In a Kubernetes cluster, it's typically used by kube-apiserver. It listens on the main network interface(s) of the host and requires client certificate authentication to access.

Port 2380/TCP (etcd): This port is used for peer communication when a cluster includes multiple instances of etcd. It listens on the main network interface(s) of the host and requires client certificate authentication to access.

Port 2381/TCP (etcd): In kubeadm, this port is used for etcd metrics. It listens only on localhost, but does not require authentication or encryption on connection.

Port 6443/TCP (kube-apiserver): This is the main—and only—port that kube-apiserver exposes by default in kubeadm. It listens on all network interfaces on the host. It allows access to some paths (e.g., /version, /healthz) without authentication and requires authentication for the rest.

Port 10257/TCP (kube-controller-manager): This port serves up several endpoints for configuration and debugging information about the Kubernetes controller manager. It listens on the localhost interface of the control plane node(s) it's running on. Apart from the /healthz endpoint (which is used for health checks), it requires authentication.

Port 10259/TCP (kube-scheduler): This port serves up endpoints for metrics and configuration information about the Kubernetes scheduler. It listens on the localhost interface of the control plane node(s) it's running on. Apart from the /healthz endpoint (which is used for health checks), it requires authentication to use.

Port 10249/TCP (kube-proxy): This port serves up endpoints for metrics and configuration. It listens on the localhost interface of all worker nodes that it runs on. The endpoints are available without authentication.

Port 10256/TCP (kube-proxy): This port only serves up the /healthz endpoint and is available on all interfaces of the hosts it's running on. The endpoint is available without authentication.

Port 10250/TCP (kubelet): This port has a number of endpoints that the API server uses for various tasks, such as executing commands in running containers. It listens on all the interfaces of worker nodes. While the root endpoint can be accessed without valid credentials (and returns a 404 error message), all other endpoints require valid credentials.

From this listing we can see that Kubernetes runs a wide array of services—and that they vary quite a lot in terms of their utility, visibility, and authentication requirements. From a security standpoint, any exposed API should be considered, but it's generally best to focus on services that expose significant functionality to remote hosts. In the case of Kubernetes, etcd, kube-apiserver, and the kubelet fall into that category. The others become more relevant if your cluster is not using the default setup (i.e., configured to expose them more widely to remote hosts).
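The listing above can be condensed into a small data structure, which makes it easier to ask questions such as "which ports are reachable from other hosts by default?" The following is a minimal sketch that only restates the kubeadm defaults described in this post. The names `PortInfo`, `KUBEADM_DEFAULT_PORTS`, and the two helper functions are illustrative and not part of any Kubernetes tooling.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class PortInfo:
    port: int
    component: str
    all_interfaces: bool          # False means localhost only
    open_paths: Tuple[str, ...]   # paths served without credentials; "*" means all


# kubeadm defaults as described in the listing above.
KUBEADM_DEFAULT_PORTS = [
    PortInfo(2379, "etcd", True, ()),
    PortInfo(2380, "etcd", True, ()),
    PortInfo(2381, "etcd", False, ("*",)),
    # /version and /healthz are the examples given in this post, not a full list.
    PortInfo(6443, "kube-apiserver", True, ("/version", "/healthz")),
    PortInfo(10257, "kube-controller-manager", False, ("/healthz",)),
    PortInfo(10259, "kube-scheduler", False, ("/healthz",)),
    PortInfo(10249, "kube-proxy", False, ("*",)),
    PortInfo(10256, "kube-proxy", True, ("/healthz",)),
    # The kubelet root path answers without credentials but returns a 404.
    PortInfo(10250, "kubelet", True, ("/",)),
]


def remotely_reachable(ports: List[PortInfo]) -> List[PortInfo]:
    """Ports that listen on more than localhost by default."""
    return [p for p in ports if p.all_interfaces]


def reachable_without_credentials(ports: List[PortInfo]) -> List[PortInfo]:
    """Remotely reachable ports that answer at least one path without credentials."""
    return [p for p in remotely_reachable(ports) if p.open_paths]


if __name__ == "__main__":
    for p in remotely_reachable(KUBEADM_DEFAULT_PORTS):
        print(f"{p.port}/TCP ({p.component}) open paths: {p.open_paths or 'none'}")
```

If your cluster deviates from the defaults, the table would need to be adjusted to match the actual configuration before drawing conclusions from it.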

Managed Kubernetes

APIs are exposed differently in managed Kubernetes services compared to unmanaged environments. One major difference is that the service provider hosts the control plane nodes and typically does not expose all of the services running on it to remote connections. Cluster operators and attackers should not have access to APIs exposed to localhost interfaces on the control plane nodes. This architecture generally improves security for cluster operators as they can rely on the cloud service provider (CSP) to handle the secure configuration of the control plane components, but it's still important to understand how these components are exposed by the CSP.

Control plane

In the three major managed Kubernetes environments, Amazon Elastic Kubernetes Service (EKS), Azure Kubernetes Service (AKS), and Google Kubernetes Engine (GKE), kube-apiserver is the main control plane service that is exposed. The default for these (and many other managed Kubernetes services) is to expose this service directly to the internet, although each of them provides options to restrict access to it (see further details in the documentation for EKS, AKS, and GKE). By default, AKS requires authentication for all access to kube-apiserver, while GKE and EKS allow anonymous access only to a small number of endpoints, including /version and /healthz.

Services like Censys make it possible to track exposed kube-apiserver instances on the internet. At a basic level, the presence of kubernetes.default.svc.cluster.local in certificate names is a good indicator of an exposed Kubernetes API server. At the time of writing, we can see just over 1.5 million results for potentially exposed servers.
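The certificate-name indicator is simple enough to express as a check. The sketch below assumes you have already extracted the DNS names from a certificate's subject alternative names by some other means (for example, from a scan result export). The function name `looks_like_kube_apiserver` is hypothetical, and a match is only a hint, not proof, that the host is a Kubernetes API server.

```python
from typing import Iterable

# The internal service name mentioned above as an indicator.
K8S_MARKER_NAME = "kubernetes.default.svc.cluster.local"


def looks_like_kube_apiserver(san_dns_names: Iterable[str]) -> bool:
    """Return True if any certificate DNS name matches the Kubernetes marker name.

    Names are compared case-insensitively, ignoring surrounding whitespace
    and a trailing dot.
    """
    for name in san_dns_names:
        normalized = name.strip().lower().rstrip(".")
        if normalized == K8S_MARKER_NAME:
            return True
    return False


if __name__ == "__main__":
    names = ["kubernetes", "kubernetes.default", "kubernetes.default.svc.cluster.local"]
    print(looks_like_kube_apiserver(names))
```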

In addition to this, many managed Kubernetes services expose the /version API endpoint. This provides more precise targeting of an exposed Kubernetes service, but it misses some instances (for example, AKS) that expose the API server but do not expose any endpoints (including /version) without authentication by default. This information is also collected by internet search engines, and, at the time of writing, approximately 1.3 million hosts expose this endpoint.
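To make the /version check concrete, here is a small sketch of how a response from that endpoint might be interpreted. It deliberately leaves out the HTTP request itself and works on a status code and body you have already obtained. The function name `parse_version_response` is hypothetical, and the sketch assumes the response is a JSON object containing a `gitVersion` field, which is what kube-apiserver returns for this endpoint as far as we are aware.

```python
import json
from typing import Optional, Union


def parse_version_response(status_code: int, body: Union[str, bytes]) -> Optional[str]:
    """Return the reported Kubernetes version if /version answered anonymously.

    Returns None when the request was rejected (e.g., 401 or 403) or when the
    body does not look like a Kubernetes version document.
    """
    if status_code != 200:
        return None
    try:
        data = json.loads(body)
    except (ValueError, TypeError):
        return None
    if not isinstance(data, dict):
        return None
    git_version = data.get("gitVersion")
    return git_version if isinstance(git_version, str) else None


if __name__ == "__main__":
    sample = '{"major": "1", "minor": "29", "gitVersion": "v1.29.0"}'
    print(parse_version_response(200, sample))
```

A `None` result for a host that otherwise looks like an API server is consistent with the AKS behavior described above, where the endpoint requires authentication.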

Worker nodes

Depending on the exact version of the managed Kubernetes service they're using, cluster operators may have access to the worker nodes and therefore will need to understand the potential exposure of Kubernetes services running on those nodes.

In addition to the main Kubernetes service, all of the managed distributions have supporting services for things like networking, logging, and monitoring, which often expose ports for metrics and health checks.

Azure Kubernetes Service (AKS)

In a standard AKS cluster, the kubelet and kube-proxy components operate as expected (i.e., in the same way as they would in an unmanaged Kubernetes environment). From the perspective of API security, the kubelet is configured in the same way as it is in kubeadm. The main port (10250/TCP) listens on all interfaces and requires authentication for any paths apart from the root (which returns a 404 message). The healthz port (10248/TCP) listens on localhost and allows access without authentication.

Kube-proxy, however, is configured slightly differently. Port 10249/TCP, which serves up metrics and configuration information, is listening on all interfaces and allows access without authentication. This presents a potential risk, as an attacker who can access the port over the network can retrieve that configuration.
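A quick way to confirm whether a port such as 10249/TCP is reachable from another machine is a plain TCP connection attempt. The sketch below uses only the Python standard library. The function name `tcp_port_open` and the node address in the usage example are placeholders, and you should only run this against systems you are authorized to test.

```python
import socket


def tcp_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    node = "10.0.0.4"  # placeholder worker node address
    for port in (10249, 10250, 10256):
        state = "reachable" if tcp_port_open(node, port) else "not reachable"
        print(f"{node}:{port} is {state}")
```

A successful connection only shows that the port is reachable from where the check runs. It says nothing about whether the endpoints behind it require authentication.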

Amazon Elastic Kubernetes Service (EKS)

In an EKS cluster, the standard kubelet and kube-proxy services run on worker nodes. In terms of the interfaces they listen on and authentication they do (or don't) require, EKS is configured in the same way as AKS.

Google Kubernetes Engine (GKE)

In a GKE cluster running Dataplane V2, the node services do not include kube-proxy, so the kube-proxy ports are not present. However, the kubelet ports are present in the same networking configuration as in an unmanaged cluster. Additionally, GKE exposes the read-only kubelet port (10255/TCP), which provides access to information about running pods without authentication.

Securing Kubernetes APIs

When we're thinking about how to secure the Kubernetes APIs, the main questions are network exposure and encryption. We will cover authentication and authorization in future blog posts in this series.

An exposed API could be subject to attack either via a vulnerability in the code of the running service, through an attacker gaining access to valid credentials for the service (if they're required), or via unauthenticated access where the cluster has been misconfigured to allow access to sensitive data without authentication. Additionally, if the API transfers unencrypted data, it may be sniffed by an attacker.

To help improve Kubernetes security, APIs should only be visible to users who need to access them, and they should encrypt any sensitive data that they send or receive.

Kube-apiserver is the component that requires the most attention, as it generally has the highest number of services and users communicating with it, and, as a result, the greatest exposure to attack.

If you're using a managed Kubernetes service, you should consider configuring the cluster to only allow access from specific IP address ranges, or making the cluster endpoint private so it can't be accessed from the general internet. For unmanaged clusters, restricting access with network firewalls is an effective way to reduce the API server's exposure.
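The logic behind an IP allowlist is straightforward: a client is admitted only if its address falls inside one of the permitted ranges. The following sketch illustrates that logic with Python's `ipaddress` module. It is not how any particular cloud provider implements the feature, and the function name `is_allowed` and the documentation-range addresses are placeholders.

```python
import ipaddress
from typing import Iterable


def is_allowed(client_ip: str, allowed_cidrs: Iterable[str]) -> bool:
    """Return True if client_ip falls inside at least one allowed CIDR range."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in ipaddress.ip_network(cidr) for cidr in allowed_cidrs)


if __name__ == "__main__":
    office_ranges = ["203.0.113.0/24", "2001:db8::/32"]  # placeholder ranges
    print(is_allowed("203.0.113.10", office_ranges))
    print(is_allowed("198.51.100.7", office_ranges))
```

Keeping the list of ranges short and specific matters here, since a very broad range provides little more protection than no restriction at all.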

Access to other control plane components (etcd, kube-scheduler, and kube-controller-manager) is handled by the cloud service provider in managed Kubernetes, so it falls out of the control of the cluster operator.

With unmanaged Kubernetes, you need to consider what services actually need to connect to these components. As coordination for the cluster goes through kube-apiserver, the only services that need to communicate with these APIs are the kube-apiserver and any supporting services, such as monitoring tools that gather metrics directly from each component. Using network or host firewalls to restrict access to these services—or having them listen on localhost interfaces only—can reduce their exposure to attack and reduce the impact of compromised credentials.
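As a rough illustration of this review, the sketch below takes a list of listening sockets on a control plane node and reports which control plane component ports are bound to something other than a loopback address. Those are the ones that would need firewall rules if they are not moved to localhost. The input format (pairs of IP address and port), the `CONTROL_PLANE_PORTS` mapping, and the function name are assumptions made for this example. Gathering the list (for instance, from your operating system's socket listing tool) is left out.

```python
import ipaddress
from typing import Iterable, List, Tuple

# Control plane component ports discussed earlier in this post.
CONTROL_PLANE_PORTS = {
    2379: "etcd (client)",
    2380: "etcd (peer)",
    2381: "etcd (metrics)",
    10257: "kube-controller-manager",
    10259: "kube-scheduler",
}


def non_loopback_listeners(
    listeners: Iterable[Tuple[str, int]]
) -> List[Tuple[str, str, int]]:
    """Return (component, address, port) for control plane ports not bound to loopback.

    Each listener is an (ip_address, port) pair; addresses must be IP literals
    such as "127.0.0.1", "0.0.0.0", or "::1".
    """
    flagged = []
    for address, port in listeners:
        if port not in CONTROL_PLANE_PORTS:
            continue
        if not ipaddress.ip_address(address).is_loopback:
            flagged.append((CONTROL_PLANE_PORTS[port], address, port))
    return flagged


if __name__ == "__main__":
    observed = [("127.0.0.1", 10257), ("0.0.0.0", 10259), ("192.168.1.10", 2379)]
    for component, address, port in non_loopback_listeners(observed):
        print(f"{component} listens on {address}:{port}; restrict with a firewall")
```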

The two worker node components, kubelet and kube-proxy, require access to kube-apiserver for their operation. Kube-apiserver also needs to be able to initiate connections to the kubelet. In addition to this, some monitoring and logging services will communicate directly with these components to gather information about their performance and, in the case of the kubelet, to collect information about the containers running on the cluster node. As such, it is recommended to restrict network access to these components, while still allowing specific services (e.g., monitoring and logging systems) to query them as needed.

Conclusion

Kubernetes has a number of components that expose APIs for their operation. In general, their default settings provide reasonable security, but there are definitely some areas that could benefit from additional hardening, which should help reduce the cluster's susceptibility to attack. In the next part of our series, we'll look at how Kubernetes handles authentication to each of these services.