In the previous post in this series, we looked at how Kubernetes network security is implemented. For this post, we’ll explore a topic that often gets less attention but still has serious implications for cluster security: public key infrastructure (PKI).

Before we examine some of the implementation details, it's important to note the scope of this post. We’ll focus on Kubernetes system components and their use of PKI to secure connections between those systems. We won't cover the PKI setups used as part of service mesh implementations to secure traffic between pods that run in Kubernetes clusters. That's a different area with different considerations.

Kubernetes PKI implementation

So, why do we use PKI in Kubernetes at all? The goals from a security standpoint are to authenticate connections between Kubernetes system components and to encrypt the data transferred between those components to protect it from traffic sniffing or adversary-in-the-middle (AITM) attacks.

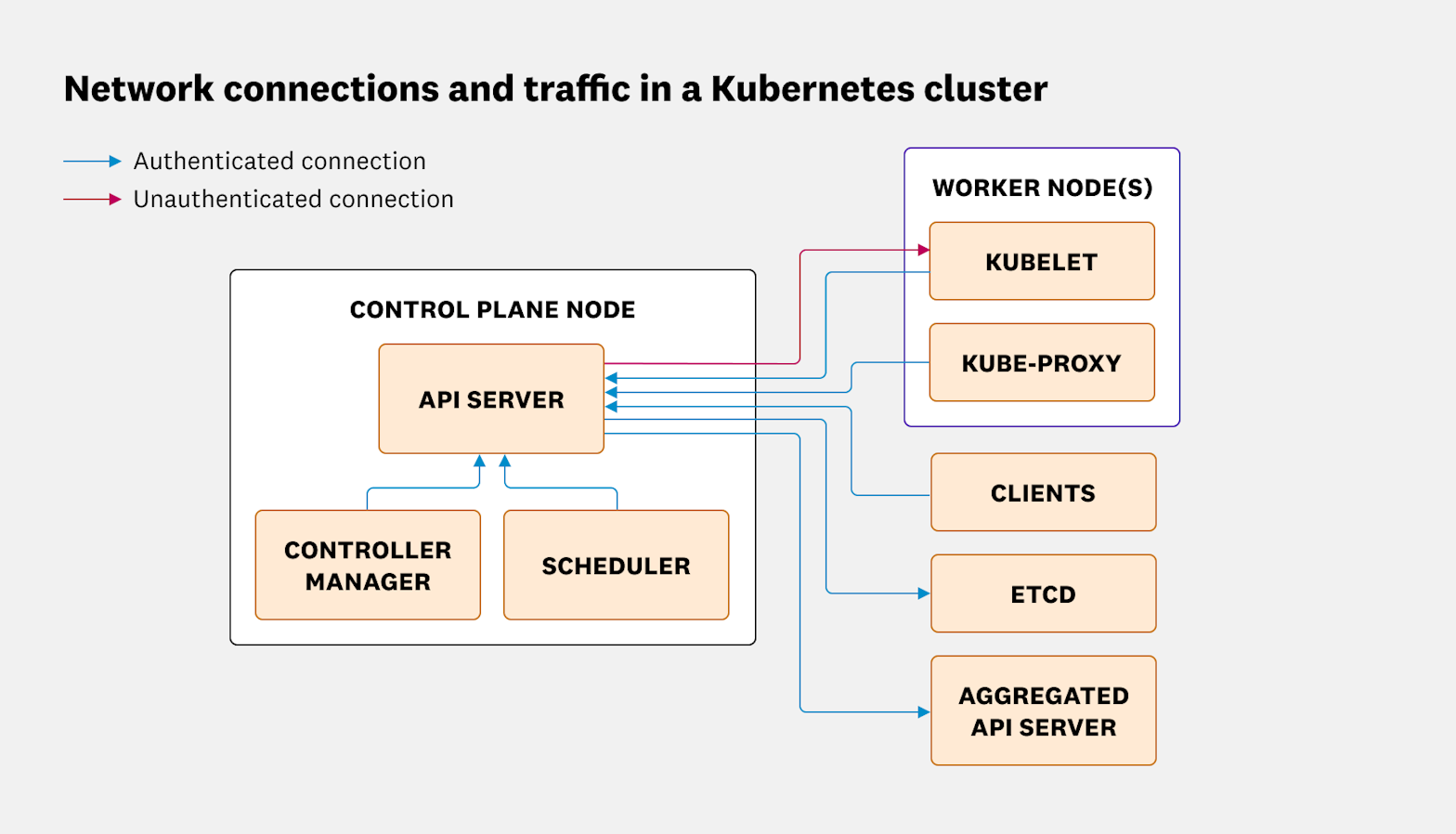

With that in mind, we need to think about what connections need to be secured. Kubernetes has many different components that communicate with each other, and each component requires TLS connections to be established.

For the purpose of this post, we'll stick to a standard kubeadm cluster to allow us to focus on core Kubernetes components and standard configurations. If we examine the components in a cluster, our map of TLS connections looks a bit like this.

Most of these are authenticated TLS connections, where the client verifies the server's certificate to confirm that it's connecting to a legitimate component. The one exception is the connection from the API server to the kubelet. This connection can be authenticated, but by default the API server doesn't verify the kubelet's serving certificate. As a result, the connection is open to AITM attacks, as noted in the Kubernetes documentation.
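To make "not authenticated by default" concrete, here's a minimal sketch using Python's standard `ssl` module. It isn't the API server's code (that's written in Go); it only contrasts a client context that skips server verification, which is roughly the API server's default position toward the kubelet, with one that verifies the kubelet's certificate against a CA bundle. The function name and its parameters are hypothetical. In a real cluster, the corresponding setting is the kube-apiserver `--kubelet-certificate-authority` flag, which only helps once kubelet serving certificates are signed by a CA the API server trusts (for example, by enabling `serverTLSBootstrap` in the kubelet configuration and approving the resulting CSRs).

```python
import ssl
from typing import Optional


def kubelet_client_context(
    kubelet_ca_file: Optional[str] = None,
    client_cert: Optional[str] = None,
    client_key: Optional[str] = None,
) -> ssl.SSLContext:
    """Build a TLS client context for connecting to a kubelet (illustrative only)."""
    # PROTOCOL_TLS_CLIENT enables hostname checking and CERT_REQUIRED by default.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)

    if kubelet_ca_file is None:
        # No CA to check against: whatever certificate the "kubelet" presents is
        # accepted, so an attacker in the network path could impersonate it.
        ctx.check_hostname = False  # must be disabled before setting CERT_NONE
        ctx.verify_mode = ssl.CERT_NONE
    else:
        # Verify the kubelet's serving certificate against a trusted CA bundle.
        ctx.load_verify_locations(cafile=kubelet_ca_file)

    if client_cert is not None:
        # The API server still authenticates itself to the kubelet with a client
        # certificate (apiserver-kubelet-client.crt in a kubeadm cluster).
        ctx.load_cert_chain(certfile=client_cert, keyfile=client_key)

    return ctx
```

Note that the client certificate and server verification are independent: a connection can authenticate the client while leaving the server unverified, which is exactly the gap described above.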

Kubernetes CAs and certificates

With all of these connections to secure, there are many certificates to manage. Slightly complicating matters, there are also multiple certificate authorities (CAs) in use. Kubernetes generally relies on its own internally managed CAs rather than public ones, so if you connect to a cluster's API server with tools like curl or a web browser, you'll get warnings about untrusted certificates because those CAs aren't in your system's trust store.

We can see the certificates and keys that are created by looking in /etc/kubernetes/pki/ on a control plane node of a cluster created with kind (which uses kubeadm to bootstrap its nodes):

    .
    |-- apiserver-etcd-client.crt
    |-- apiserver-etcd-client.key
    |-- apiserver-kubelet-client.crt
    |-- apiserver-kubelet-client.key
    |-- apiserver.crt
    |-- apiserver.key
    |-- ca.crt
    |-- ca.key
    |-- etcd
    |   |-- ca.crt
    |   |-- ca.key
    |   |-- healthcheck-client.crt
    |   |-- healthcheck-client.key
    |   |-- peer.crt
    |   |-- peer.key
    |   |-- server.crt
    |   `-- server.key
    |-- front-proxy-ca.crt
    |-- front-proxy-ca.key
    |-- front-proxy-client.crt
    |-- front-proxy-client.key
    |-- sa.key
    `-- sa.pub

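Our list includes three distinct CAs (ca.crt, etcd/ca.crt, and front-proxy-ca.crt), which is an unusual configuration for a single system. The sa.key and sa.pub pair isn't a CA; it's the key pair used to sign and verify service account tokens. The CAs need to be separate because Kubernetes uses client certificates for authentication, so a certificate signed by one CA must not be accepted by components that are meant to trust only another.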

The main Kubernetes CA signs most of the certificates in the cluster (for example, the client certificates that kubelets use to connect to the API server). You can sign certificates manually by using openssl or similar tools, but that requires access to the CA's private key on the control plane host. It's also possible to have the main Kubernetes CA sign certificates remotely through the CertificateSigningRequest API.
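The CertificateSigningRequest route can be sketched in Python. This minimal example builds a `certificates.k8s.io/v1` CertificateSigningRequest object for a client certificate, assuming you've already generated a private key and a PEM-encoded CSR (for example, with `openssl req`). The function name and the `jane` user are hypothetical; `kubernetes.io/kube-apiserver-client` is the built-in signer for API server client certificates, which kubeadm's controller manager signs with the main CA.

```python
import base64


def build_csr_manifest(name: str, csr_pem: bytes, expiration_seconds: int = 86400) -> dict:
    """Wrap a PEM-encoded PKCS#10 request in a CertificateSigningRequest object."""
    if not csr_pem.lstrip().startswith(b"-----BEGIN CERTIFICATE REQUEST-----"):
        raise ValueError("expected a PEM-encoded certificate request")
    return {
        "apiVersion": "certificates.k8s.io/v1",
        "kind": "CertificateSigningRequest",
        "metadata": {"name": name},
        "spec": {
            # spec.request holds the base64-encoded PEM CSR.
            "request": base64.b64encode(csr_pem).decode("ascii"),
            # Built-in signer for client certificates accepted by the API server.
            "signerName": "kubernetes.io/kube-apiserver-client",
            # Requested lifetime; the signer may issue a shorter one.
            "expirationSeconds": expiration_seconds,
            "usages": ["client auth"],
        },
    }
```

Serializing the result with `json.dumps` and piping it to `kubectl apply -f -` creates the request; an administrator then approves it with `kubectl certificate approve jane`, and the issued certificate appears in the object's `status.certificate` field.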

In addition to the main CA, we have two additional CAs. The first is the CA for etcd, which secures the connection between the API server and etcd (as well as the connections between etcd members). A separate CA is needed because, in the configuration that kubeadm uses, etcd grants full access to its database to any client certificate signed by the CA it trusts. If etcd trusted the main CA, an attacker who obtained any client certificate issued by that CA, such as a kubelet's, would be able to fully control the cluster data store!
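A toy model makes this reasoning explicit. The mapping below lists a few client certificates from a kubeadm cluster and the CA that issues each one; it's a simplified, hand-written table, not something read from a live cluster. The hypothetical helper returns the certificates that etcd would fully trust, given the CA that etcd is configured to trust.

```python
from __future__ import annotations

# Issuing CA for some client certificates in a kubeadm cluster (simplified).
CLIENT_CERT_ISSUERS = {
    "apiserver-etcd-client.crt": "etcd/ca.crt",
    "etcd/healthcheck-client.crt": "etcd/ca.crt",
    "apiserver-kubelet-client.crt": "ca.crt",
    "kubelet-client-current.pem": "ca.crt",
    "admin.conf (embedded client cert)": "ca.crt",
    "front-proxy-client.crt": "front-proxy-ca.crt",
}


def certs_with_full_etcd_access(etcd_trusted_ca: str) -> list[str]:
    """etcd, as configured here, grants full access to any client cert signed by its trusted CA."""
    return sorted(
        cert for cert, issuer in CLIENT_CERT_ISSUERS.items() if issuer == etcd_trusted_ca
    )
```

With etcd trusting etcd/ca.crt, only the API server's etcd client certificate and the health-check certificate qualify. Pointing etcd at ca.crt instead would extend full datastore access to every kubelet and to the admin kubeconfig.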

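The final CA is the front proxy CA (front-proxy-ca.crt), which the API server uses as its request header client CA (set with the --requestheader-client-ca-file flag). This CA is used for API aggregation, where the API server proxies requests to aggregated API servers. When it proxies a request, the API server authenticates with the front-proxy-client certificate and passes the caller's identity in HTTP headers such as X-Remote-User, and the receiving server trusts those headers only if the client certificate was signed by this CA. A separate CA is required because if the main CA were used, a client certificate issued by the main CA for another purpose could potentially be used to assert an arbitrary identity through those headers.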
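Here's a simplified model of the check that a server performs before trusting identity headers. The `ClientCert` type and `identity_from_headers` function are hypothetical. The CA file name, the `front-proxy-client` allowed name, and the `X-Remote-User` header mirror what kubeadm configures via the `--requestheader-*` flags, but this is a sketch of the logic, not the real implementation.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ClientCert:
    common_name: str
    issuer: str  # which CA signed the certificate


# Values kubeadm configures on the API server (simplified).
REQUESTHEADER_CA = "front-proxy-ca.crt"
ALLOWED_NAMES = {"front-proxy-client"}  # an empty set would allow any CN from the CA


def identity_from_headers(cert: ClientCert | None, headers: dict[str, str]) -> str | None:
    """Return the username in X-Remote-User only if the caller is a trusted front proxy."""
    if cert is None or cert.issuer != REQUESTHEADER_CA:
        return None  # not signed by the request header CA: ignore identity headers
    if ALLOWED_NAMES and cert.common_name not in ALLOWED_NAMES:
        return None  # signed by the right CA, but not an allowed proxy name
    # Real HTTP header lookups are case-insensitive; a plain dict keeps this short.
    return headers.get("X-Remote-User")
```

If REQUESTHEADER_CA were the main CA, the allowed-names list would be the only thing stopping a holder of an ordinary client certificate from claiming to be any user.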

On a worker node in our cluster, only the kubelet requires PKI files, which are stored in /var/lib/kubelet/pki. The kubelet uses the PEM file, which contains both a client certificate and its private key, to authenticate itself to the API server when it acts as a client. The crt and key files are used to serve the kubelet API. In a default kubeadm deployment, kubelet.crt is a self-signed certificate, so clients that connect to the kubelet (like the API server) can't verify it and are left at risk of sniffing and AITM attacks.

    .
    |-- kubelet-client-2025-06-27-08-58-52.pem
    |-- kubelet-client-current.pem -> /var/lib/kubelet/pki/kubelet-client-2025-06-27-08-58-52.pem
    |-- kubelet.crt
    `-- kubelet.key
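The listing shows how the kubelet stores its client credentials: it writes a timestamped PEM file and points kubelet-client-current.pem at it. The following sketch (the function name is hypothetical, and reading the directory on a real node requires root) resolves that symlink, parses the timestamp encoded in the target's filename, and lists the PEM block types inside, which for a kubelet client file should be a certificate followed by a private key.

```python
import os
import re
from datetime import datetime

# Matches the timestamped file name, e.g. kubelet-client-2025-06-27-08-58-52.pem
STAMPED = re.compile(r"kubelet-client-(\d{4}(?:-\d{2}){5})\.pem$")
PEM_LABEL = re.compile(r"-----BEGIN ([A-Z ]+)-----")


def describe_client_pem(pki_dir: str = "/var/lib/kubelet/pki") -> dict:
    """Resolve kubelet-client-current.pem and summarize the file it points at."""
    target = os.path.realpath(os.path.join(pki_dir, "kubelet-client-current.pem"))
    match = STAMPED.search(os.path.basename(target))
    stamp = datetime.strptime(match.group(1), "%Y-%m-%d-%H-%M-%S") if match else None
    with open(target, encoding="ascii") as f:
        labels = PEM_LABEL.findall(f.read())  # e.g. CERTIFICATE, EC PRIVATE KEY
    return {"file": os.path.basename(target), "timestamp": stamp, "pem_blocks": labels}
```

Because the certificate and its private key live in the same file, anyone who can read kubelet-client-current.pem can authenticate to the API server as that node.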

The Kubernetes documentation site explains the certificates and their intended uses.

Securing Kubernetes certificates

From a security perspective, there are a couple of key considerations to keep in mind when it comes to Kubernetes' use of PKI and certificates. The first is that it's very important to secure the private key files, in particular ca.key, which is the private key for the main Kubernetes CA. An attacker who gets access to that file will have persistent cluster-admin level access because they can use it to create new privileged credentials.
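One basic check is making sure key files on the control plane aren't readable by anyone but their owner. This minimal sketch walks /etc/kubernetes/pki and flags any *.key file with group or other permission bits set; the function name is hypothetical, and it assumes that owner-only (0600) permissions are the expected baseline for these files.

```python
import os
import stat


def overly_permissive_keys(pki_dir: str = "/etc/kubernetes/pki") -> list:
    """Return (path, mode) for private key files that group or others can access."""
    findings = []
    for root, _dirs, files in os.walk(pki_dir):
        for name in files:
            if not name.endswith(".key"):
                continue  # certificates and public keys aren't secret
            path = os.path.join(root, name)
            mode = stat.S_IMODE(os.stat(path).st_mode)
            if mode & 0o077:  # any group or other permission bit is set
                findings.append((os.path.relpath(path, pki_dir), oct(mode)))
    return sorted(findings)
```

File permissions only guard against other local users; anyone with root on the node, or with access to a backup of the directory, can still read ca.key.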

To protect key files, avoid committing them to source code repositories, and carefully control who can access backups that contain them. How you handle this can depend on the Kubernetes distribution that you use. For example, Rancher Kubernetes Engine 1 (RKE1) stores the private keys in a ConfigMap, so any user who can read that ConfigMap effectively has full access to the cluster. It's important to be aware of distribution-specific behavior like this.
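As a rough pre-commit guard, a short script can look for PEM private key headers in a working tree. This is a minimal sketch with a hypothetical function name; it matches PKCS#8 headers (`PRIVATE KEY`, `ENCRYPTED PRIVATE KEY`) as well as legacy forms such as `RSA PRIVATE KEY` and `EC PRIVATE KEY`, and it skips the .git directory.

```python
import os
import re

# Matches "-----BEGIN PRIVATE KEY-----" and variants like "BEGIN RSA PRIVATE KEY".
PRIVATE_KEY_HEADER = re.compile(rb"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----")


def find_private_keys(repo_root: str, max_bytes: int = 1_000_000) -> list:
    """List files under repo_root that contain a PEM private key header."""
    hits = []
    for root, dirs, files in os.walk(repo_root):
        dirs[:] = [name for name in dirs if name != ".git"]  # don't descend into .git
        for name in files:
            path = os.path.join(root, name)
            try:
                with open(path, "rb") as f:
                    data = f.read(max_bytes)  # cap reads on very large files
            except OSError:
                continue  # unreadable file: skip it rather than abort the scan
            if PRIVATE_KEY_HEADER.search(data):
                hits.append(os.path.relpath(path, repo_root))
    return sorted(hits)
```

This won't catch keys that are base64-encoded, such as the client-key-data field in a kubeconfig file, so a dedicated secret scanner is a better long-term control.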

Client certificate security concerns

While we're discussing PKI security and Kubernetes, it's important to address the security challenges that come from using client certificates for authentication. The main problem is that Kubernetes has no method of revoking individual certificates, because it doesn't support certificate revocation lists (CRLs) or protocols such as the Online Certificate Status Protocol (OCSP).

Therefore, if an attacker gets access to a valid client certificate, the only way to completely remove the attacker's access before the certificate expires is to rotate the CA keys and then reissue all the certificates in the cluster. These activities can be complex and disruptive, depending on your cluster architecture. For this reason, the use of client certificates should be minimized, and ordinary users should not use client certificates for authentication.
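A deliberately simplified model shows why. The hypothetical `accepted` check below asks only whether a certificate was signed by the trusted CA and is within its validity window. Like Kubernetes client certificate authentication, it has no step that consults a revocation list, so the only levers left are expiry and changing which CA is trusted. The CA identifiers and dates are made up for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class ClientCert:
    subject: str
    issuer_ca: str  # identifier for the CA key that signed the certificate
    not_before: datetime
    not_after: datetime


def accepted(cert: ClientCert, trusted_ca: str, now: datetime) -> bool:
    """Trusted issuer plus validity window; note the absence of any revocation check."""
    return cert.issuer_ca == trusted_ca and cert.not_before <= now <= cert.not_after


now = datetime(2025, 7, 1)
stolen = ClientCert("kubernetes-admin", "ca-2025", now - timedelta(days=1), now + timedelta(days=364))
```

The stolen certificate keeps working until either it expires or the cluster stops trusting "ca-2025". Since kubeadm issues client certificates that are valid for one year by default, waiting for expiry is rarely an acceptable option.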

Conclusion

Kubernetes system components use PKI to secure their communications and provide authentication to their APIs. It's important to understand how the different Kubernetes CAs are used and to protect the sensitive files that those CAs use.

In the next part of this series, we’ll explore Kubernetes secrets management and discuss good practices for handling sensitive credentials in your clusters.